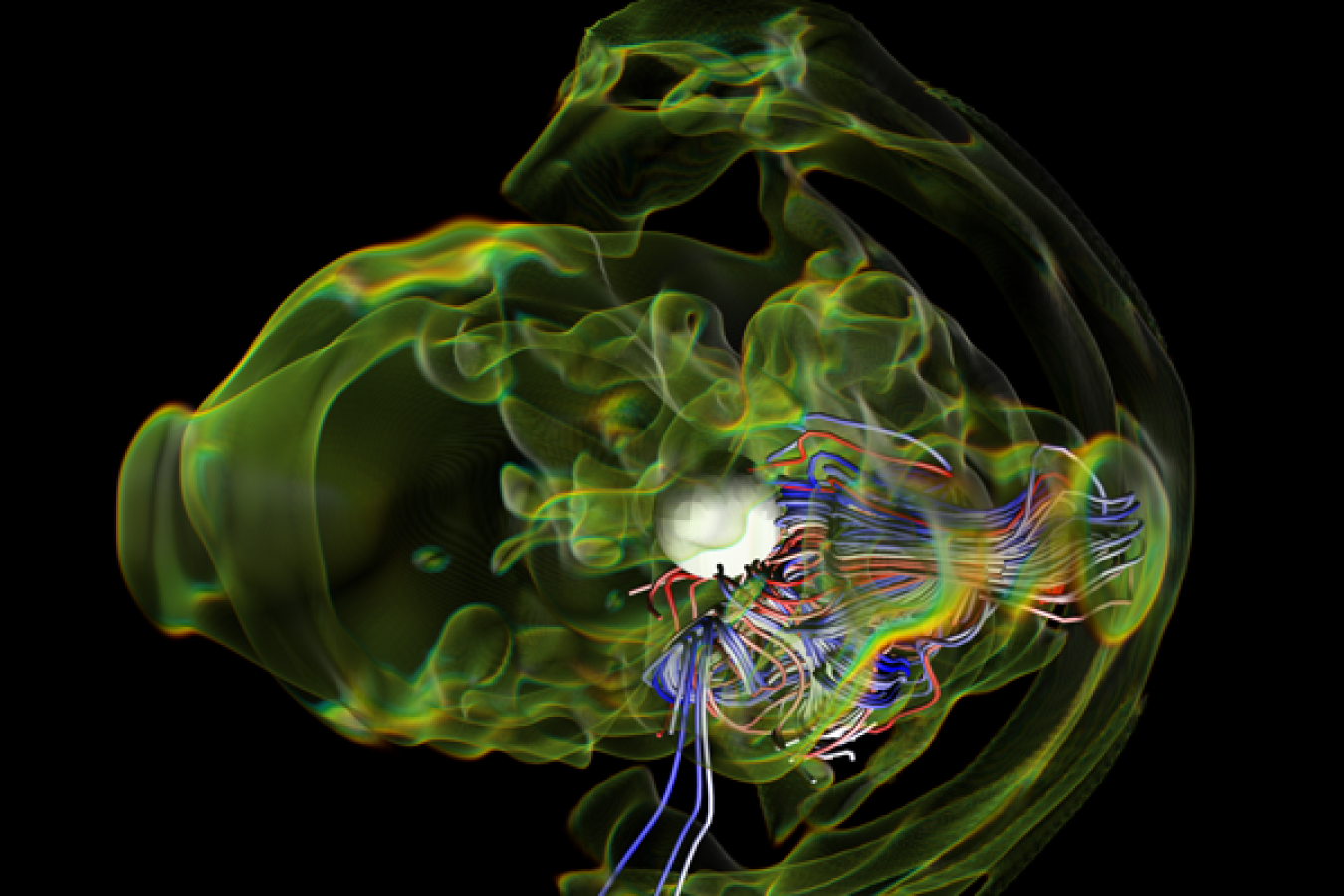

Scientific computing, including modeling, simulation and artificial intelligence, coupled with traditional theoretical and experimental approaches, enables breakthrough scientific discoveries and pushes innovation forward. As scientific modeling and simulation become more complex and ambitious, high-performance computing (HPC), commonly known as supercomputing, provides the invaluable ability to perform these complex calculations at high speeds. Supercomputers, along with advances in software, algorithms, methods and workflows, equip researchers with the tools needed to study systems that would otherwise be impractical, or impossible, to investigate by traditional means due to their complexity or the danger they pose.

The Advanced Scientific Computing Research (ASCR) program leads the nation and the world in supercomputing, advanced networking and state-of-the-art research in computer science, mathematics and computational science. ASCR’s coordinated research efforts, directed at exploiting the power of HPC, allow scientists to solve the Nation’s most pressing grand challenge problems in energy, climate change and human health. These discoveries will help shape our understanding of the universe, bolster U.S. economic competitiveness, and contribute to a better future.

Maintaining U.S. leadership in the face of fierce international competition requires computer scientists, applied mathematicians and computational scientists who know how to develop tools and methods to harness supercomputers to solve complex problems today and develop the technology of the future. Supercomputers push the edge of what is possible for U.S. science and innovation, enabling scientists and engineers to explore systems too large, too complex, too dangerous, too small, or too fleeting to study by experiment. From atoms to astrophysics, understanding these complex systems delivers new materials, new drugs, more efficient engines and turbines, better weather forecasts, and other technologies to maintain U.S. competitiveness in a wide array of industries.

We support U.S. research at hundreds of institutions and deploy open-access supercomputing and network facilities at our National Laboratories. Our supercomputers are some of the world’s most powerful, and our high-speed research network is specially built for moving enormous scientific datasets at light speed. From artificial intelligence to quantum computing, our vibrant research community keeps the U.S. ahead in a rapidly evolving high-tech field and in the industries it affects. Through strong partnerships with industry and interdisciplinary collaborations between domain scientists, applied mathematicians and computer scientists, we maintain U.S. leadership in science, technology, and innovation.

Learn more about the mission of Advanced Scientific Computing Research and how we support it.

Introducing Frontier

U.S. scientists and collaborators have a powerful new instrument at their disposal: the Nation's first exascale supercomputer. In June 2022, the international Top500 list of the most powerful systems in the world named the Department of Energy (DOE) Office of Science system Frontier the world's fastest supercomputer.

Located at DOE's Oak Ridge National Laboratory, Frontier is a collaboration between DOE and U.S. technology companies HPE and AMD. This milestone marks the beginning of the long-awaited exascale era, following more than 10 years of research and development by the nation's brightest minds, not only for Frontier but also for other upcoming DOE exascale systems.

Exascale systems will provide the next generation of computing urgently needed to understand and predict climate change, design new materials for energy technologies and fusion reactors, build a stronger and more adaptive power grid, develop new cancer treatments, provide rapid, near-real-time data analysis for scientific facilities such as light sources, and address challenges in energy, environment, and national security.

ASCR Subprograms

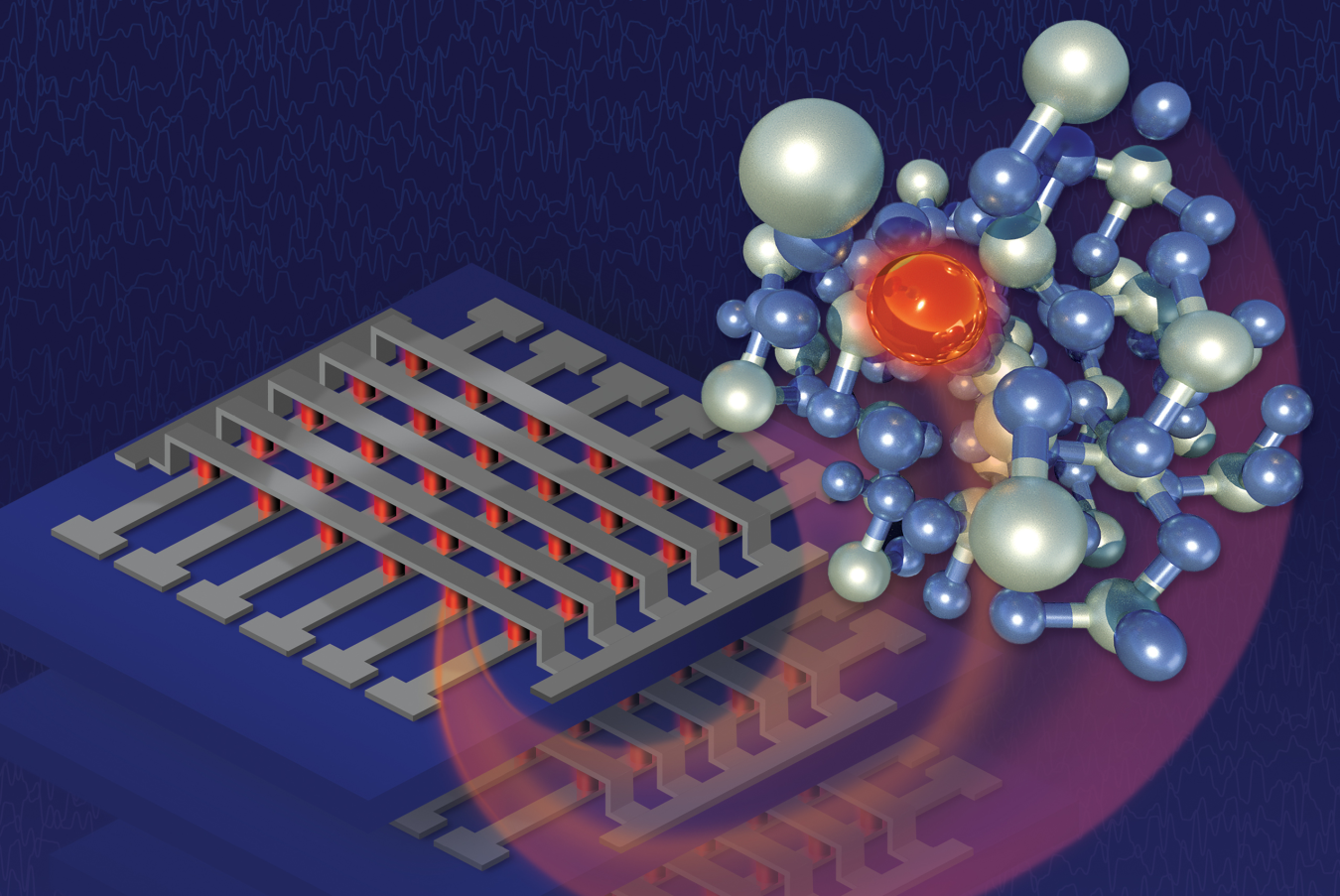

Applied Mathematics Research

The applied mathematics research program develops the key mathematical advances, algorithms, and software for using high-performance scientific computing to solve real-world problems.

Computer Science Research

The computer science research program supports research to enable computing and networking at extreme scales and generate innovative advancements in computer performance.

Computational Partnerships

Our partnership program supports deep collaborations between discipline scientists, applied mathematicians and computer scientists to accelerate scientific computing.

Emerging Technologies

Computer technology is a rapidly advancing field. ASCR supports emerging technologies through the Research and Evaluation Prototypes program, which addresses the challenges of next-generation technologies.

Supercomputing and Network Facilities

ASCR supercomputers and network facilities are open to researchers from industry, academia, and the national laboratories. The supercomputers are among the fastest in the world, and our network is specifically engineered to move large scientific datasets quickly.

Contact Information

Advanced Scientific Computing Research

U.S. Department of Energy

SC-21/Germantown Building

1000 Independence Avenue, SW

Washington, DC 20585

P: (301) 903-7486

F: (301) 903-4846

E: sc.ascr@science.doe.gov